
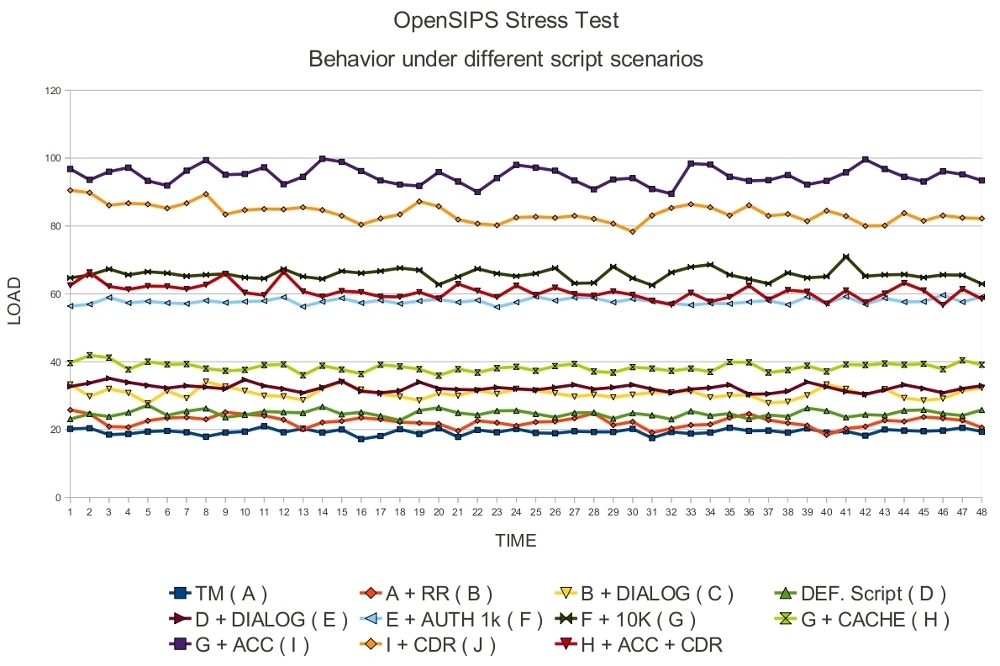

Several stress tests were performed using OpenSIPS 1.6.4 to simulate some real-life scenarios, to get an idea of the load that OpenSIPS can handle, and to measure the performance penalty of using OpenSIPS features such as dialog support, DB authentication, DB accounting and memory caching.

1. What hardware was used for the stress tests

The OpenSIPS proxy was installed on an Intel i7 920 @ 2.67GHz CPU with 6 GB of available RAM. The UAS and UACs resided on the same LAN as the proxy, to avoid network limitations.

2. What script scenarios were used

The base test was that of a simple stateful SIP proxy. Then we kept adding features on top of this very basic configuration: loose routing, dialog support, authentication and accounting. In every test the proxy ran with 32 children, and the database back-end was MySQL.

3. Performance tests

A total of 11 tests were performed, using 11 different scenarios. The goal was to achieve the highest possible CPS (calls per second) in the given scenario, store load samples from the OpenSIPS proxy and then analyze the results.

3.1 Simple stateful proxy

In this first test, OpenSIPS behaved as a simple stateful proxy, just statefully passing messages from the UAC to the UAS (a simple t_relay()). The purpose of this test was to see what performance penalty is introduced by making the proxy stateful. The actual script used for this scenario can be found here. In this scenario we stopped the test at 13000 CPS with an average load of 19.3% (actual load returned by htop). See chart, where this particular test is marked as test A.

3.2 Stateful proxy with loose routing

In the second test, the OpenSIPS script also implements the "Record-Route" mechanism, recording the path in initial requests and then making sequential requests follow the determined path. The purpose of this test was to see what performance penalty is introduced by the record-routing and loose-routing mechanism.
The actual script used for this scenario can be found here. In this scenario we stopped the test at 12000 CPS with an average load of 20.6% (actual load returned by htop). See chart, where this particular test is marked as test B.

3.3 Stateful proxy with loose routing and dialog support

In the third test we additionally made OpenSIPS dialog aware. The purpose of this particular test was to determine the performance penalty introduced by the dialog module. The actual script used for this scenario can be found here. In this scenario we stopped the test at 9000 CPS with an average load of 20.5% (actual load returned by htop). See chart, where this particular test is marked as test C.

3.4 Default Script

The fourth test had OpenSIPS running with the default script (provided by OpenSIPS distros). In this scenario, OpenSIPS can act as a SIP registrar, can properly handle CANCELs and can detect traffic loops. OpenSIPS routed requests based on USRLOC, but only one subscriber was used. The purpose of this test was to see the performance penalty of a more advanced routing logic, given that the script used by this scenario is an enhanced version of the script used in test 3.2. The actual script used for this scenario can be found here. In this scenario we stopped the test at 9000 CPS with an average load of 17.1% (actual load returned by htop). See chart, where this particular test is marked as test D.

3.5 Default Script with dialog support

This scenario added dialog support on top of the previous one. The purpose of this scenario was to determine the performance penalty introduced by the dialog module. The actual script used for this scenario can be found here. In this scenario we stopped the test at 9000 CPS with an average load of 22.3% (actual load returned by htop). See chart, where this particular test is marked as test E.
3.6 Default Script with dialog support and authentication

Call authentication was added on top of the previous scenario. 1000 subscribers were used, with a local MySQL server as the DB back-end. The purpose of this test was to see the performance penalty introduced by having the proxy authenticate users. The actual script used for this scenario can be found here. In this scenario we stopped the test at 6000 CPS with an average load of 26.7% (actual load returned by htop). See chart, where this particular test is marked as test F.

3.7 10k subscribers

This test used the same script as the previous one, the only difference being that there were 10000 users in the subscribers table. The purpose of this test was to see how the USRLOC module scales with the number of registered users. In this scenario we stopped the test at 6000 CPS with an average load of 30.3% (actual load returned by htop). See chart, where this particular test is marked as test G.

3.8 Subscriber caching

In this test, OpenSIPS used the localcache module in order to perform fewer database queries. The cache expiry time was set to 20 minutes, so all 10k registered subscribers stayed in the cache for the duration of the test. The purpose of this test was to see how much DB queries affect OpenSIPS performance, and how much caching can help. The actual script used for this scenario can be found here. In this scenario we stopped the test at 6000 CPS with an average load of 18% (actual load returned by htop). See chart, where this particular test is marked as test H.

3.9 Accounting

This test had OpenSIPS running with 10k subscribers, with authentication (no caching), dialog aware and performing the old type of accounting (two entries, one for INVITE and one for BYE). The purpose of this test was to see the performance penalty introduced by having OpenSIPS perform the old type of accounting. The actual script used for this scenario can be found here.
In this scenario we stopped the test at 6000 CPS with an average load of 43.8% (actual load returned by htop). See chart, where this particular test is marked as test I.

3.10 CDR accounting

In this test, OpenSIPS was directly generating CDRs for each call, as opposed to the previous scenario. The purpose of this test was to see how the new type of accounting compares to the old one. The actual script used for this scenario can be found here. In this scenario we managed to achieve 6000 CPS with an average load of 38.7% (actual load returned by htop). See chart, where this particular test is marked as test J.

3.11 CDR accounting + Auth Caching

In the last test, OpenSIPS was generating CDRs just as in the previous test, but it was also caching the 10k subscribers it had in the MySQL database. In this scenario we stopped the test at 6000 CPS with an average load of 28.1% (actual load returned by htop). See chart.

4. Load statistics graph

Each test reached a different CPS value, ranging from 13000 CPS in the first test to 6000 CPS in the last tests. To give an accurate overall comparison of the tests, we scaled all the results up to the 13000 CPS of the first test, adjusting the load accordingly. So, while the X axis shows time, the Y axis represents a function based on actual CPU load and CPS.

Test naming convention: each particular test is described in the following way: [ PrevTestID + ] Description ( TestId ). Example: a test adding dialog support on top of a previous test labeled X would be labeled: X + Dialog ( Y ).

See full size chart
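The exact function plotted on the Y axis is not given in the text. As a rough sketch, assuming the scaling is a simple linear extrapolation (load multiplied by 13000 and divided by the CPS actually reached), the reported figures could be normalized as follows. The names `RESULTS`, `scaled_load` and `scaled_results` are illustrative, and the label "K" for the last test (3.11) is hypothetical, since the text does not name it.

```python
# Figures reported in section 3: test ID -> (CPS reached, average CPU load in %).
# "K" is a hypothetical label for test 3.11, which the text leaves unnamed.
RESULTS = {
    "A": (13000, 19.3),
    "B": (12000, 20.6),
    "C": (9000, 20.5),
    "D": (9000, 17.1),
    "E": (9000, 22.3),
    "F": (6000, 26.7),
    "G": (6000, 30.3),
    "H": (6000, 18.0),
    "I": (6000, 43.8),
    "J": (6000, 38.7),
    "K": (6000, 28.1),
}

REFERENCE_CPS = 13000  # call rate of the first test, used as the common scale


def scaled_load(cps, load_percent, reference_cps=REFERENCE_CPS):
    """Extrapolate a measured load to the reference call rate.

    Assumption: CPU load grows linearly with CPS. The original chart
    may use a different function.
    """
    return load_percent * reference_cps / cps


def scaled_results(results=RESULTS):
    """Return test ID -> load scaled to REFERENCE_CPS, rounded to 0.1."""
    return {
        test_id: round(scaled_load(cps, load), 1)
        for test_id, (cps, load) in results.items()
    }


if __name__ == "__main__":
    for test_id, value in scaled_results().items():
        print(f"{test_id}: {value:.1f}% at {REFERENCE_CPS} CPS (extrapolated)")
```

Under this assumption a test already run at 13000 CPS keeps its measured load, while a test stopped at 6000 CPS has its load multiplied by 13000/6000. These extrapolated values only make the tests comparable with one another. They do not predict the load the proxy would actually show at 13000 CPS.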

5. Performance penalty table
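One way to estimate such penalties from the figures in section 3 is to compare the CPU load per call of a test with that of the test it builds on. The sketch below is illustrative only: the per-call cost metric, the function names and the choice of pairs are assumptions, not the method used to produce the original table.

```python
# (CPS reached, average CPU load in %) as reported in section 3.
MEASURED = {
    "A": (13000, 19.3),  # simple stateful proxy
    "B": (12000, 20.6),  # A + loose routing
    "D": (9000, 17.1),   # default script
    "E": (9000, 22.3),   # D + dialog support
    "G": (6000, 30.3),   # authentication, 10k subscribers
    "H": (6000, 18.0),   # G + subscriber caching
}


def cost_per_call(cps, load_percent):
    """CPU load (in %) attributed to one call per second (assumed metric)."""
    return load_percent / cps


def relative_penalty(base, derived):
    """Fractional change in per-call cost going from `base` to `derived`.

    Both arguments are (cps, load_percent) tuples. A positive result means
    the derived scenario costs more per call; a negative result means it
    costs less (for example when caching is added).
    """
    return cost_per_call(*derived) / cost_per_call(*base) - 1.0


if __name__ == "__main__":
    for base_id, derived_id in [("A", "B"), ("D", "E"), ("G", "H")]:
        change = relative_penalty(MEASURED[base_id], MEASURED[derived_id])
        print(f"{base_id} -> {derived_id}: {change:+.1%}")
```

Because the tests were stopped at different CPS values, comparing raw load percentages directly would understate the cost of the heavier scenarios. Dividing by CPS first puts both tests on the same footing.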

6. Conclusions
